If you have a very large site, any SEO audit should include looking at all the pages and deciding whether to keep them, redirect them, merge them, or get rid of them. Also, any audit should prioritize well performing pages that need to be updated, as well as prioritize low performing pages that have a potential to easily gain rankings.

For sites with less than 1000 pages, a common way to do all this would be to connect Screaming Frog with Google Analytics, Google Search Console and Ahrefs, but for large sites, you may not get enough API calls.

We could also use Ahrefs or SEMrush for this, but their stats are never as accurate as Google Search Console and Google Analytics data. I found that the most accurate, practical and flexible way is to import and combine data from multiple reliable sources into a database from which everything can be easily sorted.

This process requires some technical knowledge so it is more of an algorithm than a complete tutorial. I used PHP for parsing and MySQL for DB.Step 1: Crawl the site with Screaming Frog

- Do the typical site crawl without connecting it to GA, GSC or Ahrefs.

- Export all the “internal” results in CSV format.

- Parse the CSV file for the most important fields, such as: URL, status code, indexability, title, word count, inlinks, crawl depth, response time.

- Import all the fields into a DB table, using URL as the Primary key. We will use this key to add all the other data.

Step 2: Import Data from Google Search Console

Google Search Console will only give you up to 1000 results so we need to use Google Looker studio to get all the data.

- Create a new report in Looker Studio and connect it Google Search Console.

- For the report dimensions, use Landing Page.

- For the report metrics, use URL clicks, Impressions, CTR, Average Position.

- Pick the smallest time range that will give you enough statistically significance data.

- Export the report to CSV and update the DB table with the new data (for each URL add clicks, impressions, CTR, position.

- Then create another report, but this time using both Landing Page AND Query as dimensions.

- Export Landing Page + Query data and create a new table with 6 columns (URL as index, Query, URL clicks, Impressions, CTR, Average Position). With the URL and queries combined we can easily identify keywords that would be easy wins to try to rank for.

Step 3: Import Data from Google Analytics

We will use GA to get data about visitors from other search engines and social media.

- In GA 4, go to Engagement, then Traffic Acquisition, then Customize report.

- For dimension we will use Landing Page and Session Source or Medium (depending how specific we want to get with specific social media sites).

- For metrics we probably only need sessions.

- Use the same time period that was used in step 2.

- Export the report in CSV and import it into the database table for every URL, adding “social media” and “other search engines” as a column.

- If the source is Yahoo, Bing, Ecosia, Duckduckgo add their session value to the “other search engines” count.

- If the source is Facebook, Instagram, Twitter…. add their session value to the “social media” count.

Step 4: Add linking data

No source provides the full picture of all incoming external links, so we will use both Google Search Console and Ahrefs

- In Google Search Console, go to links -> External links -> Top linked pages.

- Export that data into CSV and import it into the table with the new column for “GSC links” for every URL found.

- In Ahrefs go to Site Explorer, enter your domain, click on “Best by links”.

- Export that data into CSV and import it into the table with the new column for “Ahrefs links”.

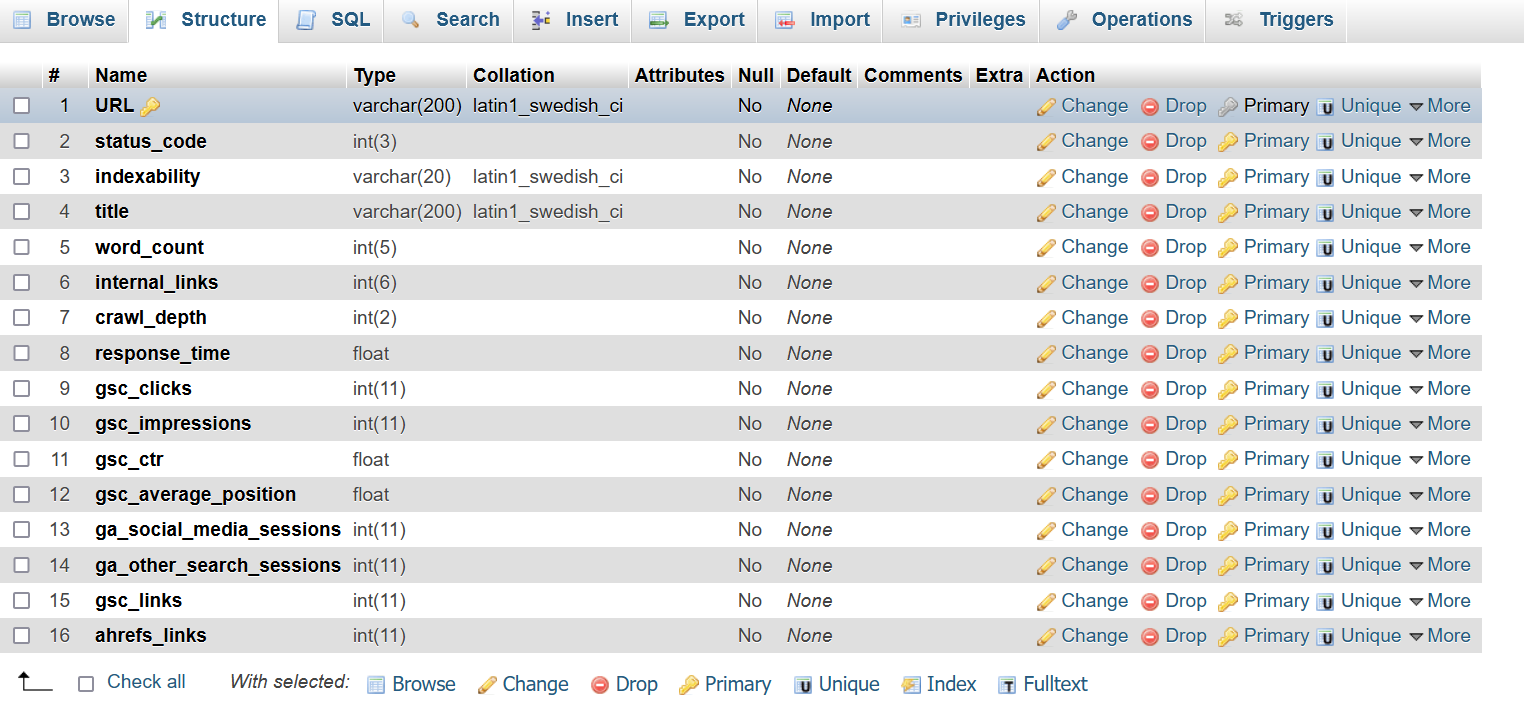

What do we have so far

This is the structure of my table in phpMyAdmin:

- URL – full URL of the page

- status_code – Status code of the page(from Screaming Frog)

- indexability – is the page indexable (from Screaming Frog)

- title – page title (from Screaming Frog)

- word_count – word count of that page (from Screaming Frog)

- internal_links – number of internal links to that page (from Screaming Frog)

- crawl_depth – how many clicks is the page away from the homepage (from Screaming Frog)

- response_time – Response time of the page (from Screaming Frog)

- gsc_clicks – Number of clicks the page received in a time period (from Google Search Console)

- gsc_impressions – Number of SERPs impressions the page received in a time period (from Google Search Console)

- gsc_ctr – Click through rate of the page on Google SERPs (from Google Search Console))

- gsc_average_position – Average position of the page on Google SERPs (from Google Search Console)

- ga_social_media_sessions – visits from social media during the same time period (from Google Analytics)

- ga_other_search_sessions – visits from Bing, Yahoo and other SE during the same time period (from Google Analytics)

- gsc_links – incoming links to that page (from Google Search Console)

- ahrefs_links – incoming links to that page (from Google Ahrefs)

What do we do with all this data

Now that we have all the data we can sort the table in any way we want and decide if it needs a technical fix, update, merge it, redirect it or leave it.

Technical Fix

Now that we have our table we can grab rows that may have technical issues and prioritize them by sorting important columns (such as clicks from Google, or incoming links…). Some of the technical fixes include:

- If a page has a status code other than 200 it may require a technical fix./li>

- Pages that have the slowest response time should be analyzed with third party tools (PageSpeed insights or GTmetrix) for a more accurate assessment

- Pages with Non-Indexable status should be prioritized with other metrics and assessed (check robots.txt, htaccess, X-Robots-Tag, meta robots…)

Redirect the page

If a page has external incoming links (gsc_links and ahrefs_links columns), but few page views, it could make sense to 301 redirect the page to another page with a very similar topic.

Merge the page

If an old page has a decent content, but no external incoming links and a few page views, it could make sense to merge its content with another page with the same topic.

Update the title tag and meta description

If a page has low CTR (gsc_ctr) but high impressions (gsc_impressions) it could make sense to update its title and meta description to make them more “click worthy”. Prioritize fix by impressions count.

Internal linking

Update content with easy wins

In step 2 we created another table with URL (as index), Query, URL clicks, Impressions, CTR, Average Position as columns. We can sort through this table to find keywords to update our content for easy wins.

- Sort the table by Count of rows of queries for each URL with “gsc_average_position>10” and “gsc_average_position<20” and gsc_impressions >10. This will give us a list of URLs that rank for a lot of different queries on the second page. These pages could increase traffic by updating content that includes these keywords for lots of easy wins. Even mentioning the keyword 1 more time could increase page’s relevancy for that keyword.

- Sort the table by Impressions (gsc_impressions) where “gsc_average_position>10” and “gsc_average_position<20”. This will give us a prioritized list of pages where we could add a single keyword in key positions for more easy wins.

Expand content

If a page has thin content (sort by word_count column), but has lots of clicks from organic, it has an excellent potential to rank for other related keywords. It could benefit by adding related content and filling content gaps.

Social Media engagement

If a page gets a lot of visitors from social media (sort by ga_social_media_sessions), it could be worth going into Google Analytics and looking into the exact source of social media visits (whether it is Facebook, Instagram, Twitter)… This would prioritize our engagement with the audience on social media (whether it is responding to comments or posting more content).

Keep the page

If a page is performing well, sometimes it could make sense just to leave as is. Any update just for the sake of update risks throwing off relevancy and should be done very carefully.

All of these steps are just a few examples of what you can do to improve your site if you combine the most relevant data. These examples are mostly content related. In a more technical audit we would import other columns from Screaming Frog, such as HTTPS, canonicals, directives, issues with images, schema… But, we would also use the same stats from Google Search Console and Google Analytics to prioritize those issues as well.